I’m fascinated by the fine line between what I call a Fanocracy and a cult. A Fanocracy is when people are attracted to a tribe of like-minded people, be it a team they support, a sport they enjoy playing, a rock band or author they love, or a company that treats them right.

It’s the genuine human connections when fans share their experiences because they’re motivated, inspired, and excited.

Things veer off into a cult when particular language is used, when falsehoods are employed, and when leaders use coercion to keep people in line.

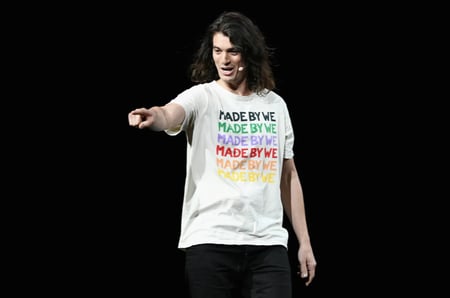

Adam Neumann, founder and former CEO of WeWork, managed to build a hype machine that lured employees and investors into an ultimately unsustainable business. The new book The Cult of We: WeWork, Adam Neumann, And The Great Startup Delusion is an excellent analysis of what went wrong at the company that was once valued at $47 billion.

The Cult of We is written by Wall Street Journal reporters Eliot Brown and Maureen Farrell, who covered the company for years as it was growing. They dive into Neumann’s bizarre world of marijuana-fueled parties and private jet travel and his remarkable ability to build a cult-like atmosphere around himself and his company.

Neumann’s storytelling gifts, often expressed in cultish language, made investors believe that his office-sharing startup should be valued by the market not as a simple real estate company with traditionally low valuations, but rather as a software company with high multiples. That led to a hunger for funding at ever higher valuations to sustain the cult, and there were many VCs willing to jump in.

Billions were lost when the Neumann party inevitably fizzled out.

Another new book of note is Cultish: The Language of Fanaticism by Amanda Montell.

Montell analyzes the social science of cult influence: how cultish groups from Jonestown and Scientology to SoulCycle and social media gurus use language as the ultimate form of power.

Montell argues that it’s the language being used that is important, and that the same language is in use everywhere: in, as she says, “modern start-ups, Peloton leaderboards, and Instagram feeds.”

Cultish is particularly fascinating to me because the ideas in the book help to explain the allure of modern conspiracy theories such as those associated with QAnon and Anti-Vaxxers.

It’s interesting to learn from Montell how language becomes a device that moves people from being fans to being supporters of a cult.

The danger when cults meet the Facebook algorithm

As I have written before, I believe the Facebook algorithm to be the most destructive technology ever invented.

When the destructive nature of the Facebook algorithm meets the language of a cult, many people are sucked into the resulting vortex of misinformation.

With AI-powered social networks like Facebook, users see more of the information they interact with. That becomes super dangerous when what’s being shared on Facebook uses cultish language.

Are you a bird watcher who joins a few birder groups, clicks “like” on the cute short-eared owl photo from your friend, searches on nearby bird sanctuaries, and shares a video of a majestic egret in flight? Bingo! Facebook will show you more and more bird-related content.

Just as Netflix and similar services amplify the kinds of movies and shows we watch, prompting us to watch more of the same, Facebook and other social networking services fill our news feeds with the same kind of information we click on, encouraging us to view more.

However, there are massive dangers to both individuals and society with this technology.

If somebody clicks on a sensational headline once because they are curious, the Facebook feed learns a little bit from that interaction and the AI serves up similar stories. If that new content is also viewed, then the system serves up more and more similar content. For this reason, Facebook amplifies the false information and polarizing content of cults because it’s what the systems have been trained to do!
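To make that feedback loop concrete, here is a toy sketch in Python. It is not how Facebook's feed actually works; the function name `simulate_feed`, the topic weights, the `boost` parameter, and the assumption that the user views everything shown on one topic are all made up for illustration. It only shows how a system that rewards engagement with more of the same can tilt a feed toward a single topic.

```python
def simulate_feed(topics, clicked_topic, rounds, boost=1.0, feed_size=10):
    """Toy engagement loop (hypothetical, not any real platform's algorithm).

    Returns, for each round, how many of the feed's slots go to
    `clicked_topic` when slots are shared out in proportion to weights.
    """
    # Every topic starts with the same weight: a balanced feed.
    weights = {topic: 1.0 for topic in topics}
    history = []

    for _ in range(rounds):
        total = sum(weights.values())
        # Share the feed slots in proportion to each topic's weight.
        feed = {t: feed_size * w / total for t, w in weights.items()}
        history.append(feed[clicked_topic])

        # Assumption: the user views everything shown on this one topic,
        # and each view nudges that topic's weight upward.
        weights[clicked_topic] += boost * feed[clicked_topic]

    return history


if __name__ == "__main__":
    topics = ["birds", "sports", "news", "conspiracy"]
    shares = simulate_feed(topics, "conspiracy", rounds=5)
    for round_number, share in enumerate(shares, start=1):
        print(f"round {round_number}: {share:.1f} of 10 slots")
```

In this sketch, the clicked topic's share of the feed grows every round even though the user never asked for more of it; the loop itself does the amplifying.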

The destructive aspects of the Facebook algorithm pose a very real problem for global society. The algorithm leads tens of millions of Facebook’s 2.7 billion global users into an abyss of misinformation, a quagmire of lies, and a quicksand of conspiracy theories.

As I argue in Fanocracy, creating fans is a great way to build a business. But it’s also important for us to be aware that fandom can veer off into the destructive nature of a cult, and that technologies like those from Facebook amplify the negative aspects.

Adam Neumann image via Vanity Fair